OpenAI, a U.S. firm, has once again surprised the world with GPT-4, its most recent AI model. This large language model can understand and generate fluent, meaningful text. It powers an enhanced version of the company’s popular chatbot, ChatGPT. GPT-4 is currently available through a premium subscription or by joining OpenAI’s waitlist.

GPT-4 and what it can do

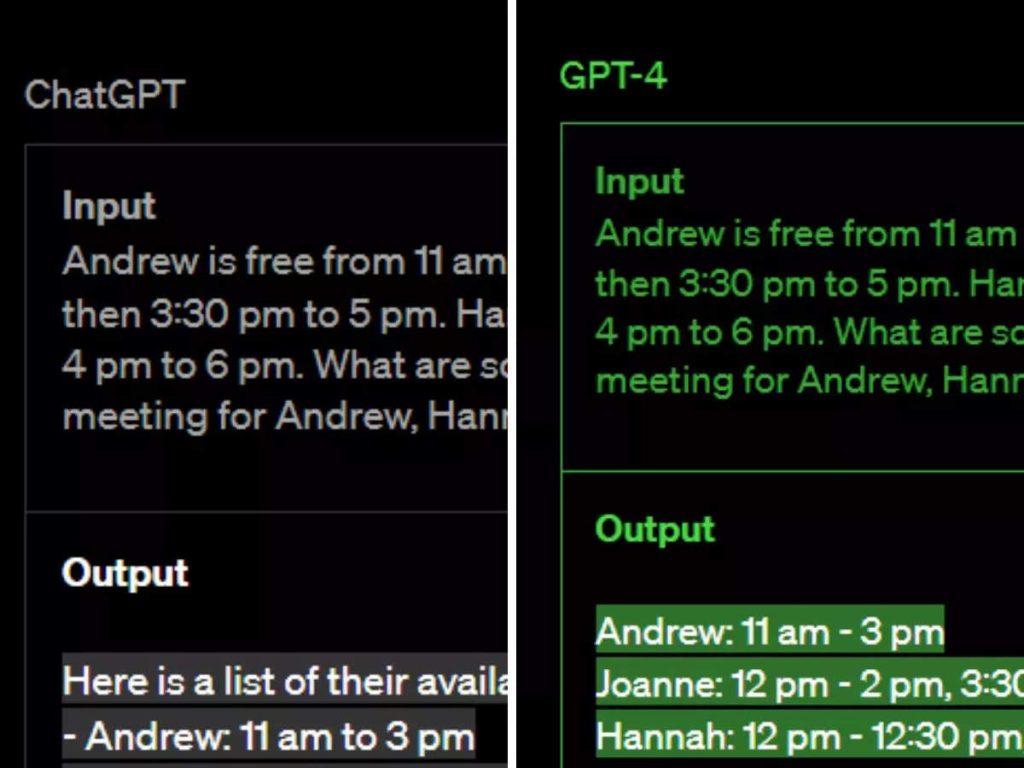

Compared to GPT-3.5, the technology that first drove ChatGPT, GPT-4 is a notable advancement. It is more creative and more conversational. Its most significant innovation is the ability to accept text and image input together and take both into account when drafting a response. For instance, if given a picture of some ingredients and asked, “What can we make from these?”, GPT-4 provides a list of recipes and meal suggestions. According to the model, “amusing images can convey a variety of human feelings,” which suggests that it can recognize emotional content in images. People who are blind are already benefiting from its ability to describe images in detail.

While GPT-3.5 struggled with long instructions, GPT-4 can take in up to 25,000 words of context, roughly eight times as much as the previous model. GPT-4 was put through various tests that were originally designed for humans, and it performed much better than average. For instance, it scored in the top 10% of test takers on a mock bar exam, while its predecessor only managed to reach the bottom 10%. Additionally, GPT-4 did exceptionally well on Advanced Placement exams in environmental science, statistics, art history, biology, and economics.

Aptitude Test of GPT-4

GPT-4 performed poorly, however, in intermediate English Language and Literature, receiving a 40% overall score. Even so, in terms of language understanding it outperforms other high-performing language models in English and 25 other languages, including Punjabi, Marathi, Bengali, Urdu, and Telugu. Almost immediately after ChatGPT’s introduction, text generated by it began to appear in college assignments and other written tasks. Its influence now threatens the integrity of examinations and education systems.

OpenAI has released early data showing that GPT-4 can perform a significant number of white-collar tasks, particularly programming and writing, while leaving industrial and scientific work largely unaffected. Wider use of language models will affect public affairs and the economy.

If we define intelligence as “a very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly, and learn from experience,” GPT-4 meets four of the seven requirements. It has yet to fully master planning and self-directed learning. If it does master all of these abilities, it may well end up one step ahead of humans.

Ethical questions

GPT-4 retains many of its predecessor’s faults. Its output can occasionally sound accurate while being false, a phenomenon OpenAI calls “hallucination.” While it is far superior to GPT-3.5 in terms of factual accuracy, it may still subtly slip in fabricated information. Ironically, OpenAI has not been open about how GPT-4 functions internally.

While citing safety as grounds for confidentiality seems reasonable, it also allows OpenAI to evade critical scrutiny of its model. GPT-4 has been trained on data scraped from the Internet that contains many harmful biases and stereotypes. There is also a common assumption that a large dataset is diverse and accurately represents the world at large.

However, this is not the case on the Internet, where people from economically developed nations, young people, and male voices are overrepresented. So far, OpenAI’s approach to addressing these biases has been to develop a separate model to moderate the answers, because it believes that curating the training set would be too difficult. One potential flaw in this strategy is that the moderator model may be taught to identify only the prejudices we are already conscious of, primarily in the English language. Such a model may be unaware of non-Western cultural assumptions, such as those based on caste.

GPT-4 has a high potential for abuse as a generator of propaganda and misinformation. OpenAI has stated that it has worked extensively to make the model safer to use, for example by having it decline to produce objectionable content, but whether these efforts will prevent GPT-4 from enrolling in “WhatsApp University” remains to be seen.